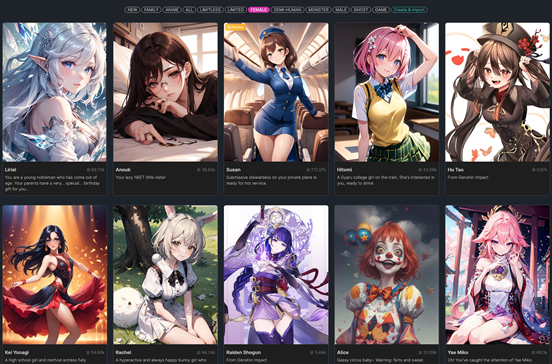

How Often Do AI Systems Fail in NSFW Detection?

How Accurate Are AI Moderation Tools?

The use of Artificial Intelligence (AI) to detect Not Safe For Work (NSFW) content has surged as digital platforms work to stay safe and professional. Nonetheless, the AI systems powering these tools still make errors. Understanding how often and in what ways they fail is essential to controlling their failure rate and improving their effectiveness.

Current Performance Metrics

Recent research suggests that modern AI systems achieve an average accuracy of 85% to 90% on NSFW classification. This means that roughly 10% to 15% of content may be classified incorrectly, as either a false positive or a false negative. These errors can arise from many sources, including but not limited to poor training data, the nuanced nature of context, and the rapidly changing landscape of language and imagery used in digital communications.
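The link between accuracy and error rate can be made concrete with a confusion matrix. The sketch below is illustrative only: the `ConfusionCounts` class, the function names, and the audit counts are assumptions made up for this example, not figures from any real moderation system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Outcome counts from comparing classifier output with human labels."""
    true_positive: int   # NSFW content correctly flagged
    false_positive: int  # safe content wrongly flagged
    true_negative: int   # safe content correctly passed
    false_negative: int  # NSFW content wrongly passed

    @property
    def total(self) -> int:
        return (self.true_positive + self.false_positive
                + self.true_negative + self.false_negative)


def accuracy(counts: ConfusionCounts) -> float:
    """Share of all items the classifier labeled correctly."""
    return (counts.true_positive + counts.true_negative) / counts.total


def error_rate(counts: ConfusionCounts) -> float:
    """Share of all items that were false positives or false negatives."""
    return (counts.false_positive + counts.false_negative) / counts.total


# Hypothetical audit of 1,000 items (illustrative numbers, not real data).
audit = ConfusionCounts(true_positive=90, false_positive=60,
                        true_negative=790, false_negative=60)
print(f"accuracy:   {accuracy(audit):.2%}")
print(f"error rate: {error_rate(audit):.2%}")
```

With these made-up counts, 880 of 1,000 items are labeled correctly, so accuracy is 88% and the remaining 12% is split between false positives and false negatives. Accuracy alone does not say how the errors divide between the two types, which is why they are worth tracking separately.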

Context is Key: The Problem of False Positives

A false positive occurs when an ML system flags acceptable content as inappropriate. This can lead to work-safe medical or educational content, for example images labeled with the word 'anatomy', being misclassified as offensive. According to industry data from 2023, about 12% of the content tagged by AI as NSFW was misclassified and did not actually breach platform rules. This over-sensitivity can cause unnecessary censorship and limit the spread of valuable information.

False Negatives: Failing to Hit the Target

False negatives, on the other hand, are cases where AI fails to tag real NSFW content as such. This may be especially harmful, as these failures allow inappropriate content to linger on platforms, exposing users to offensive material. According to reports, AI systems generally miss about 8% of genuine NSFW content. Many of these misses occur because the AI is not sophisticated enough to recognize the content, for example when people use clever disguises or subtle variations of words that the system cannot detect.
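The two error figures quoted above measure different things: the 12% figure is a share of everything the system flagged, while the 8% figure is a share of everything that was genuinely NSFW. The following sketch shows one way to compute both. The function names are hypothetical, and the counts are invented solely so that the arithmetic reproduces the quoted percentages.

```python
def false_discovery_rate(true_positive: int, false_positive: int) -> float:
    """Share of flagged items that were actually safe (wrongly flagged)."""
    flagged = true_positive + false_positive
    return false_positive / flagged if flagged else 0.0


def miss_rate(true_positive: int, false_negative: int) -> float:
    """Share of genuinely NSFW items that the classifier failed to flag."""
    actual_nsfw = true_positive + false_negative
    return false_negative / actual_nsfw if actual_nsfw else 0.0


# Hypothetical counts chosen only to match the percentages in the text.
TRUE_POSITIVE = 506   # NSFW items correctly flagged
FALSE_POSITIVE = 69   # safe items wrongly flagged
FALSE_NEGATIVE = 44   # NSFW items that slipped through

fdr = false_discovery_rate(TRUE_POSITIVE, FALSE_POSITIVE)
missed = miss_rate(TRUE_POSITIVE, FALSE_NEGATIVE)

print(f"flagged items that were safe: {fdr:.0%}")
print(f"NSFW items that were missed:  {missed:.0%}")
```

Keeping the two rates separate matters because they usually trade off against each other: making a classifier stricter tends to lower the miss rate while raising the share of wrongly flagged content, and vice versa.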

Improving Detection Accuracy

Reducing both false positives and false negatives requires significant improvements in machine learning algorithms, paired with better training data sets. Higher-quality, more diverse training data will help AI systems handle this complexity and interpret human language and imagery across different cultures and contexts.

Deployment of AI with Ethical Considerations

The ethical issues of deploying an NSFW detection AI must also be taken into account. A top priority is ensuring that these systems are transparent and respect user privacy. Users must be able to challenge AI decisions, which creates feedback that can be used to further improve the accuracy and fairness of the AI.

Future Directions

Going forward, the goal is to build AI systems that can adapt much more quickly to new genres and forms of content. This will require continuous learning models that are updated with each new case, reducing both false positives and false negatives.
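As a rough illustration of a model that is updated with each new case, the sketch below implements a tiny online logistic regression in plain Python. It is a toy under stated assumptions, not a description of how any production moderation system works: the `OnlineLogisticClassifier` class, the two-element feature vector, and the learning rate are all hypothetical.

```python
import math
from typing import List


class OnlineLogisticClassifier:
    """Toy logistic regression that learns from one labeled case at a time."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights: List[float] = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features: List[float]) -> float:
        """Estimated probability that the item is NSFW."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp()
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features: List[float], label: int) -> None:
        """One gradient step on a single reviewed case (label: 1 = NSFW)."""
        error = self.predict_proba(features) - label
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error


# Hypothetical feature vector for a new kind of content the model missed.
model = OnlineLogisticClassifier(n_features=2)
new_case = [1.0, 0.0]

before = model.predict_proba(new_case)
for _ in range(20):
    # Each pass stands in for a human reviewer confirming a similar case.
    model.update(new_case, label=1)
after = model.predict_proba(new_case)

print(f"NSFW probability before feedback: {before:.3f}")
print(f"NSFW probability after feedback:  {after:.3f}")
```

The untrained model starts at a probability of 0.5, and each confirmed case pushes its estimate for similar content toward the reviewed label. A real system would also need safeguards this sketch omits, such as checks that new updates do not degrade performance on older content.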

Conclusion: A Work in Progress

AI systems for NSFW filtering are not perfect. They have respectable accuracy now, but they could be much better. By addressing the causes of AI failures, developers can make these systems more reliable and trustworthy.

Visit the post for more details about nsfw character ai development and its challenges. Over time, AI will improve in its capacity to handle all sorts of NSFW content responsibly and ethically across digital channels and adjacent platforms.